Author: Jason Brownlee

The Stanford course on deep learning for computer vision is perhaps the most widely known course on the topic.

This is not surprising given that the course has been running for four years, is presented by top academics and researchers in the field, and the course lectures and notes are made freely available.

This is an incredible resource for students and deep learning practitioners alike.

In this post, you will discover a gentle introduction to this course that you can use to get a jump-start on computer vision with deep learning methods.

After reading this post, you will know:

- The breakdown of the course, including who teaches it, how long it has been taught, and what it covers.

- The breakdown of the lectures in the course, including the three lectures to focus on if you are already familiar with deep learning.

- A review of the course, including how it compares to similar courses on the same subject matter.

Let’s get started.

Overview

This tutorial is divided into three parts; they are:

- Course Breakdown

- Lecture Breakdown

- Discussion and Review

Course Breakdown

CS231n is a computer science course on computer vision with neural networks titled “Convolutional Neural Networks for Visual Recognition” and taught at Stanford University in the School of Engineering.

This course is famous both for being early (it started in 2015, just three years after the AlexNet breakthrough) and for being free, with videos and slides available.

The course was also popularized by interesting experiments created by Andrej Karpathy, such as demonstrations of neural networks on computer vision problems in JavaScript (ConvNetJS).

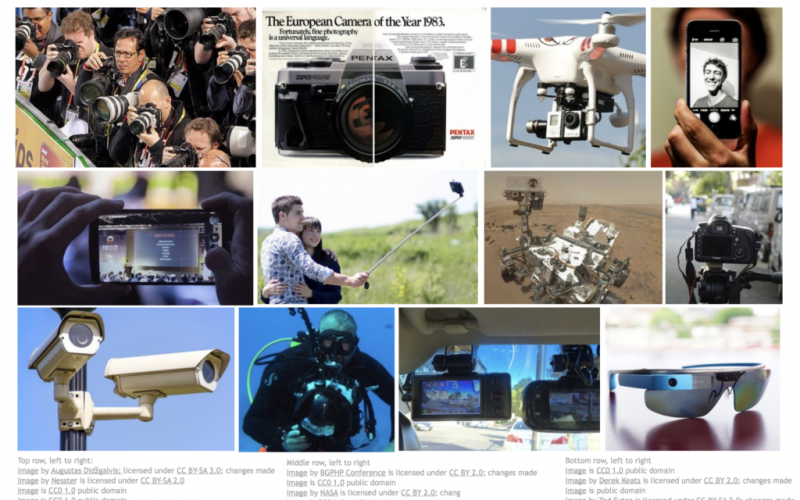

Example From the Introductory Lecture to the CS231n Course

At the time of writing, this course has run for four years and most of the content from each of those years is still available:

- 2015: Homepage, Syllabus

- 2016: Homepage, Syllabus, Videos

- 2017: Homepage, Syllabus, Videos

- 2018: Homepage, Syllabus

The course is taught by Fei-Fei Li, a famous computer vision researcher at the Stanford Vision Lab and, more recently, a Chief Scientist at Google. In 2015 and 2016, the course was co-taught by Andrej Karpathy, now at Tesla. Justin Johnson has also been involved since the beginning and co-taught with Serena Yeung in 2017 and 2018.

The course covers the use of convolutional neural networks (CNNs) for computer vision problems, with a focus on how CNNs work, image classification and recognition tasks, and an introduction to advanced applications such as generative models and deep reinforcement learning.

This course is a deep dive into details of the deep learning architectures with a focus on learning end-to-end models for these tasks, particularly image classification.

— CS231n: Convolutional Neural Networks for Visual Recognition

Lecture Breakdown

At the time of writing, the 2018 videos are not available publicly, but the 2017 videos are.

As such, we will focus on the 2017 syllabus and video content.

The course is divided into 16 lectures: 14 cover the core topics of the course, followed by two guest lectures on advanced topics and a final session of student talks for which no video is public.

The full list of videos with links to each is provided below:

- Lecture 1: Introduction to Convolutional Neural Networks for Visual Recognition

- Lecture 2: Image Classification

- Lecture 3: Loss Functions and Optimization

- Lecture 4: Introduction to Neural Networks

- Lecture 5: Convolutional Neural Networks

- Lecture 6: Training Neural Networks, part I

- Lecture 7: Training Neural Networks, part II

- Lecture 8: Deep Learning Software

- Lecture 9: CNN Architectures

- Lecture 10: Recurrent Neural Networks

- Lecture 11: Detection and Segmentation

- Lecture 12: Visualizing and Understanding

- Lecture 13: Generative Models

- Lecture 14: Deep Reinforcement Learning

- Lecture 15: Guest lectures on advanced topics

- Lecture 16: Student spotlight talks, conclusions (no video)

Do not overlook the Course Syllabus webpage. It includes valuable material such as:

- Links to PDF slides from the lectures that you can have open in a separate browser tab.

- Links to the papers discussed in the lecture, which are often required reading.

- Links to HTML notes pages that include elaborated descriptions of methods and sample code.

Example of HTML Notes Available via the Course Syllabus

Must-Watch Lectures for Experienced Practitioners

Perhaps you are already familiar with the basics of neural networks and deep learning.

In that case, you do not need to watch all the lectures if you want a crash course in techniques for computer vision.

A cut-down, must-watch list of lectures is as follows:

- Lecture 5: Convolutional Neural Networks. This lecture will get you up to speed with CNN layers and how they work.

- Lecture 9: CNN Architectures. This lecture will get you up to speed with popular network architectures for image classification.

- Lecture 11: Detection and Segmentation. This lecture will get you up to speed with tasks beyond image classification, such as object detection and image segmentation.

That is the minimum set.

You can add three more lectures to get a little more; they are:

- Lecture 12: Visualizing and Understanding. This lecture describes ways of understanding what a fit model sees or has learned.

- Lecture 13: Generative Models. This lecture provides an introduction to VAEs and GANs and modern image synthesis methods.

- Lecture 14: Deep Reinforcement Learning. This lecture provides a crash course in deep reinforcement learning methods.

Discussion and Review

I have watched all the videos for this course for, I think, each year they have been made available.

Most recently, I watched all the lectures for the 2017 version of the course over two days (two mornings on 2x speed) and took extensive notes. I recommend this approach, even if you are an experienced deep learning practitioner.

I recommend it for a few reasons:

- The field of deep learning is changing rapidly.

- Stanford is a center of innovation and excellence in the field (e.g. the vision lab).

- Repetition of the basics leads to new ideas and insights.

Nevertheless, if you want to get up to speed fast with deep learning for computer vision, the three lectures suggested in the previous section are the way to go (i.e. lectures 5, 9, and 11).

The course has a blistering pace.

It expects you to keep up, and if you don’t get something, it’s up to you to pause and go off and figure it out.

This is fair enough (the course is at Stanford, after all), but it is less friendly than other courses, most notably Andrew Ng’s DeepLearning.ai convolutional neural networks course.

As such, I do not recommend this course if you need some hand-holding; take the other course as it was designed for developers, not Stanford students.

That being said, you are hearing about how CNNs and modern methods work from some of the top academics and grad students in the world, and that is invaluable.

The fact that the videos are made freely available is a unique opportunity for practitioners.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- CS231n: Convolutional Neural Networks for Visual Recognition, 2018.

- CS231n: Convolutional Neural Networks for Visual Recognition, 2017.

- CS231n Schedule and Syllabus, 2017.

- Stanford University CS231n, Spring 2017, YouTube Playlist

- Fei-Fei Li Homepage

- Andrej Karpathy Homepage

- Justin Johnson Homepage

- Serena Yeung Homepage

- Notes and assignments for Stanford CS class, GitHub

- r/cs231n Subreddit

- ConvNetJS

Summary

In this post, you discovered a gentle introduction to this course that you can use to get a jump-start on computer vision with deep learning methods.

Specifically, you learned:

- The breakdown of the course, including who teaches it, how long it has been taught, and what it covers.

- The breakdown of the lectures in the course, including the three lectures to focus on if you are already familiar with deep learning.

- A review of the course, including how it compares to similar courses on the same subject matter.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

The post Stanford Convolutional Neural Networks for Visual Recognition Course (Review) appeared first on Machine Learning Mastery.