Author: Jason Brownlee

Predictive modeling with deep learning is a skill that modern developers need to know.

PyTorch is the premier open-source deep learning framework developed and maintained by Facebook.

At its core, PyTorch is a mathematical library that allows you to perform efficient computation and automatic differentiation on graph-based models. Achieving this directly is challenging, although thankfully, the modern PyTorch API provides classes and idioms that allow you to easily develop a suite of deep learning models.

In this tutorial, you will discover a step-by-step guide to developing deep learning models in PyTorch.

After completing this tutorial, you will know:

- The difference between Torch and PyTorch and how to install and confirm PyTorch is working.

- The five-step life-cycle of PyTorch models and how to define, fit, and evaluate models.

- How to develop PyTorch deep learning models for regression, classification, and predictive modeling tasks.

Let’s get started.


PyTorch Tutorial Overview

The focus of this tutorial is on using the PyTorch API for common deep learning model development tasks; we will not be diving into the math and theory of deep learning. For that, I recommend starting with this excellent book.

The best way to learn deep learning in Python is by doing. Dive in. You can circle back for more theory later.

I have designed each code example to use best practices and to be standalone so that you can copy and paste it directly into your project and adapt it to your specific needs. This will give you a massive head start over trying to figure out the API from official documentation alone.

It is a large tutorial, and as such, it is divided into three parts; they are:

- How to Install PyTorch

    - What Are Torch and PyTorch?

    - How to Install PyTorch

    - How to Confirm PyTorch Is Installed

- PyTorch Deep Learning Model Life-Cycle

    - Step 1: Prepare the Data

    - Step 2: Define the Model

    - Step 3: Train the Model

    - Step 4: Evaluate the Model

    - Step 5: Make Predictions

- How to Develop PyTorch Deep Learning Models

    - How to Develop an MLP for Binary Classification

    - How to Develop an MLP for Multiclass Classification

    - How to Develop an MLP for Regression

    - How to Develop a CNN for Image Classification

You Can Do Deep Learning in Python!

Work through this tutorial. It will take you 60 minutes, max!

You do not need to understand everything (at least not right now). Your goal is to run through the tutorial end-to-end and get a result. Write down your questions as you go. Make heavy use of the API documentation to learn about all of the functions that you’re using.

You do not need to know the math first. Math is a compact way of describing how algorithms work, specifically tools from linear algebra, probability, and calculus. These are not the only tools that you can use to learn how algorithms work. You can also use code and explore algorithm behavior with different inputs and outputs. Knowing the math will not tell you what algorithm to choose or how to best configure it. You can only discover that through carefully controlled experiments.

You do not need to know how the algorithms work. It is important to know about the limitations and how to configure deep learning algorithms. But learning about algorithms can come later. You need to build up this algorithm knowledge slowly over a long period of time. Today, start by getting comfortable with the platform.

You do not need to be a Python programmer. The syntax of the Python language can be intuitive if you are new to it. Just like other languages, focus on function calls (e.g. function()) and assignments (e.g. a = "b"). This will get you most of the way. You are a developer; you know how to pick up the basics of a language really fast. Just get started and dive into the details later.

You do not need to be a deep learning expert. You can learn about the benefits and limitations of various algorithms later, and there are plenty of tutorials that you can read to brush up on the steps of a deep learning project.

1. How to Install PyTorch

In this section, you will discover what PyTorch is, how to install it, and how to confirm that it is installed correctly.

1.1. What Are Torch and PyTorch?

PyTorch is an open-source Python library for deep learning developed and maintained by Facebook.

The project started in 2016 and quickly became a popular framework among developers and researchers.

Torch (Torch7) is an open-source project for deep learning written in C and generally used via the Lua interface. It was a precursor project to PyTorch and is no longer actively developed. PyTorch includes “Torch” in the name, acknowledging the prior Torch library, with the “Py” prefix indicating the Python focus of the new project.

The PyTorch API is simple and flexible, making it a favorite for academics and researchers in the development of new deep learning models and applications. The extensive use has led to many extensions for specific applications (such as text, computer vision, and audio data), and many pre-trained models that can be used directly. As such, it may be the most popular library used by academics.

The flexibility of PyTorch comes at the cost of ease of use, especially for beginners, as compared to simpler interfaces like Keras. Choosing PyTorch over Keras means accepting a slightly steeper learning curve and more code in exchange for more flexibility, and perhaps a more vibrant academic community.

1.2. How to Install PyTorch

Before installing PyTorch, ensure that you have Python installed, such as Python 3.6 or higher.

If you don’t have Python installed, you can install it using Anaconda. This tutorial will show you how:

There are many ways to install the PyTorch open-source deep learning library.

The most common, and perhaps simplest, way to install PyTorch on your workstation is by using pip.

For example, on the command line, you can type:

pip install torch

Perhaps the most popular application of deep learning is for computer vision, and the PyTorch computer vision package is called “torchvision.”

Installing torchvision is also highly recommended and it can be installed as follows:

pip install torchvision

If you prefer to use an installation method more specific to your platform or package manager, you can see a complete list of installation instructions here:

There is no need to set up the GPU now.

All examples in this tutorial will work just fine on a modern CPU. If you want to configure PyTorch for your GPU, you can do that after completing this tutorial. Don’t get distracted!

1.3. How to Confirm PyTorch Is Installed

Once PyTorch is installed, it is important to confirm that the library was installed successfully and that you can start using it.

Don’t skip this step.

If PyTorch is not installed correctly or raises an error on this step, you won’t be able to run the examples later.

Create a new file called versions.py and copy and paste the following code into the file.

# check pytorch version
import torch
print(torch.__version__)

Save the file, then open your command line and change directory to where you saved the file.

Then type:

python versions.py

You should then see output like the following:

1.3.1

This confirms that PyTorch is installed correctly and that we are all using the same version.

This also shows you how to run a Python script from the command line. I recommend running all code from the command line in this manner, and not from a notebook or an IDE.
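Beyond printing the version, a short computation can confirm that the library actually runs. The following is a minimal sketch, not part of the original check; the variable names are illustrative, and the GPU query is only informational because the examples in this tutorial run on the CPU.

```python
# sanity-check the installation with a small tensor computation
import torch

# report the installed version and whether a CUDA GPU is visible
print(torch.__version__)
print(torch.cuda.is_available())

# add two small tensors on the CPU
a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([4.0, 5.0, 6.0])
total = a + b
print(total)
```

If the script prints a version string and a tensor without raising an error, the installation is usable for the rest of the tutorial.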

2. PyTorch Deep Learning Model Life-Cycle

In this section, you will discover the life-cycle for a deep learning model and the PyTorch API that you can use to define models.

A model has a life-cycle, and this very simple knowledge provides the backbone for both modeling a dataset and understanding the PyTorch API.

The five steps in the life-cycle are as follows:

1. Prepare the Data.

2. Define the Model.

3. Train the Model.

4. Evaluate the Model.

5. Make Predictions.

Let’s take a closer look at each step in turn.

Note: There are many ways to achieve each of these steps using the PyTorch API, although I have aimed to show you the simplest, or most common, or most idiomatic.

If you discover a better approach, let me know in the comments below.

Step 1: Prepare the Data

The first step is to load and prepare your data.

Neural network models require numerical input data and numerical output data.

You can use standard Python libraries to load and prepare tabular data, like CSV files. For example, Pandas can be used to load your CSV file, and tools from scikit-learn can be used to encode categorical data, such as class labels.

PyTorch provides the Dataset class that you can extend and customize to load your dataset.

For example, the constructor of your dataset object can load your data file (e.g. a CSV file). You can then override the __len__() function that can be used to get the length of the dataset (number of rows or samples), and the __getitem__() function that is used to get a specific sample by index.

When loading your dataset, you can also perform any required transforms, such as scaling or encoding.

A skeleton of a custom Dataset class is provided below.

# dataset definition
class CSVDataset(Dataset):
    # load the dataset
    def __init__(self, path):
        # store the inputs and outputs
        self.X = ...
        self.y = ...

    # number of rows in the dataset
    def __len__(self):
        return len(self.X)

    # get a row at an index
    def __getitem__(self, idx):
        return [self.X[idx], self.y[idx]]
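To make the skeleton concrete, here is a minimal sketch of a dataset that holds its data in memory and applies a transform while loading. The class name ArrayDataset, the toy values, and the choice of min-max scaling are assumptions made for illustration; they are not part of the tutorial's own examples.

```python
# a small in-memory dataset that follows the Dataset skeleton
import numpy as np
from torch.utils.data import Dataset

class ArrayDataset(Dataset):
    # store inputs and outputs, scaling each input column to [0, 1]
    def __init__(self, X, y):
        X = np.asarray(X, dtype='float32')
        col_min, col_max = X.min(axis=0), X.max(axis=0)
        # avoid division by zero for constant columns
        span = np.where(col_max > col_min, col_max - col_min, 1.0)
        self.X = ((X - col_min) / span).astype('float32')
        self.y = np.asarray(y, dtype='float32').reshape((-1, 1))

    # number of rows in the dataset
    def __len__(self):
        return len(self.X)

    # get a row at an index
    def __getitem__(self, idx):
        return [self.X[idx], self.y[idx]]

# toy data: three rows, two input columns, one target
dataset = ArrayDataset([[1, 10], [2, 20], [3, 30]], [0, 1, 1])
print(len(dataset))
print(dataset[0])
```

Doing the scaling in the constructor keeps __getitem__() cheap, which matters because it is called once per sample on every epoch.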

Once loaded, PyTorch provides the DataLoader class to navigate a Dataset instance during the training and evaluation of your model.

A DataLoader instance can be created for the training dataset, test dataset, and even a validation dataset.

The random_split() function can be used to split a dataset into train and test sets. Once split, a selection of rows from the Dataset can be provided to a DataLoader, along with the batch size and whether the data should be shuffled every epoch.

For example, we can define a DataLoader by passing in a selected sample of rows in the dataset.

...
# create the dataset
dataset = CSVDataset(...)
# split the dataset into train and test subsets by size
train, test = random_split(dataset, [train_size, test_size])
# create a data loader for train and test sets
train_dl = DataLoader(train, batch_size=32, shuffle=True)
test_dl = DataLoader(test, batch_size=1024, shuffle=False)

Once defined, a DataLoader can be enumerated, yielding one batch worth of samples each iteration.

...
# train the model
for i, (inputs, targets) in enumerate(train_dl):
    ...
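The splitting and batching described above can be tried without any data file. The sketch below assumes a synthetic dataset wrapped in TensorDataset (a class from torch.utils.data that the tutorial itself does not use) and fixed split sizes of 67 and 33 rows chosen for illustration.

```python
# split a synthetic dataset and iterate over it in batches
import torch
from torch.utils.data import TensorDataset, DataLoader, random_split

# synthetic data: 100 rows, 4 input features, 1 target
X = torch.randn(100, 4)
y = torch.randn(100, 1)
dataset = TensorDataset(X, y)

# random_split takes the size of each subset
train, test = random_split(dataset, [67, 33])

# create a data loader for train and test sets
train_dl = DataLoader(train, batch_size=32, shuffle=True)
test_dl = DataLoader(test, batch_size=1024, shuffle=False)

# each iteration yields one batch of inputs and targets
batch_sizes = []
for i, (inputs, targets) in enumerate(train_dl):
    print(i, inputs.shape, targets.shape)
    batch_sizes.append(inputs.shape[0])
```

With 67 training rows and a batch size of 32, the last batch is smaller than the others, which is the default behavior when the dataset size is not a multiple of the batch size.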

Step 2: Define the Model

The next step is to define a model.

The idiom for defining a model in PyTorch involves defining a class that extends the Module class.

The constructor of your class defines the layers of the model and the forward() function is the override that defines how to forward propagate input through the defined layers of the model.

Many layers are available, such as Linear for fully connected layers, Conv2d for convolutional layers, and MaxPool2d for pooling layers.

Activation functions can also be defined as layers, such as ReLU, Softmax, and Sigmoid.
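As a quick illustration of activation functions as layers, the sketch below applies three of them to the same small tensor. The input values are arbitrary and chosen only to show how each layer transforms negative, zero, and positive inputs.

```python
# apply activation layers to a small tensor
import torch
from torch.nn import ReLU, Sigmoid, Softmax

# one row with a negative, a zero, and a positive value
x = torch.tensor([[-1.0, 0.0, 2.0]])

# ReLU clips negative values to zero
relu_out = ReLU()(x)
# Sigmoid squashes each value into the range (0, 1)
sigmoid_out = Sigmoid()(x)
# Softmax normalizes each row so that it sums to one
softmax_out = Softmax(dim=1)(x)

print(relu_out)
print(sigmoid_out)
print(softmax_out)
```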

Below is an example of a simple MLP model with one layer.

# model definition
class MLP(Module):
    # define model elements
    def __init__(self, n_inputs):
        super(MLP, self).__init__()
        self.layer = Linear(n_inputs, 1)
        self.activation = Sigmoid()

    # forward propagate input
    def forward(self, X):
        X = self.layer(X)
        X = self.activation(X)
        return X

The weights of a given layer can also be initialized after the layer is defined in the constructor.

Common examples include the Xavier and He weight initialization schemes. For example:

...
xavier_uniform_(self.layer.weight)
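Putting the model definition and the weight initialization together, a runnable version of the one-layer model might look as follows. The input size of 4 and the random batch of 8 rows are assumptions made only so that the forward pass can be exercised.

```python
# define a one-layer model, initialize its weights, and run a forward pass
import torch
from torch.nn import Linear, Sigmoid, Module
from torch.nn.init import xavier_uniform_

class MLP(Module):
    # define model elements
    def __init__(self, n_inputs):
        super(MLP, self).__init__()
        self.layer = Linear(n_inputs, 1)
        # initialize the weights after the layer is defined
        xavier_uniform_(self.layer.weight)
        self.activation = Sigmoid()

    # forward propagate input
    def forward(self, X):
        X = self.layer(X)
        X = self.activation(X)
        return X

model = MLP(4)
# a random batch of 8 rows with 4 features each
batch = torch.randn(8, 4)
output = model(batch)
print(output.shape)

# count trainable parameters: 4 weights plus 1 bias
n_params = sum(p.numel() for p in model.parameters())
print(n_params)
```

Calling the model object directly, as in model(batch), is the usual way to invoke forward().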

Step 3: Train the Model

The training process requires that you define a loss function and an optimization algorithm.

Common loss functions include the following:

- BCELoss: Binary cross-entropy loss for binary classification.

- CrossEntropyLoss: Categorical cross-entropy loss for multi-class classification.

- MSELoss: Mean squared error loss for regression.

For more on loss functions generally, see the tutorial:
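The three loss functions listed above expect differently shaped inputs, which is a common source of errors. The sketch below computes each one on tiny hand-written tensors; all values are made up for illustration.

```python
# compute the three common losses on small example tensors
import torch
from torch.nn import BCELoss, CrossEntropyLoss, MSELoss

# binary classification: predicted probabilities and float targets, both shape (n, 1)
probs = torch.tensor([[0.9], [0.2]])
binary_targets = torch.tensor([[1.0], [0.0]])
bce = BCELoss()(probs, binary_targets)

# multiclass classification: one score per class, integer class labels of shape (n,)
scores = torch.tensor([[2.0, 0.5, 0.1], [0.1, 0.2, 3.0]])
class_targets = torch.tensor([0, 2])
ce = CrossEntropyLoss()(scores, class_targets)

# regression: predictions and float targets, both shape (n, 1)
preds = torch.tensor([[2.5], [0.0]])
real_targets = torch.tensor([[3.0], [-1.0]])
mse = MSELoss()(preds, real_targets)

print(bce.item(), ce.item(), mse.item())
```

Note that BCELoss and MSELoss take float targets with the same shape as the predictions, whereas CrossEntropyLoss takes integer class indices.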

Stochastic gradient descent is used for optimization, and the standard algorithm is provided by the SGD class, although other versions of the algorithm are available, such as Adam.

# define the optimization
criterion = MSELoss()
optimizer = SGD(model.parameters(), lr=0.01, momentum=0.9)

Training the model involves enumerating the DataLoader for the training dataset.

First, a loop is required for the number of training epochs. Then an inner loop is required for the mini-batches for stochastic gradient descent.

...
# enumerate epochs
for epoch in range(100):
    # enumerate mini batches
    for i, (inputs, targets) in enumerate(train_dl):
        ...

Each update to the model follows the same general pattern, consisting of:

- Clearing the last error gradient.

- A forward pass of the input through the model.

- Calculating the loss for the model output.

- Backpropagating the error through the model.

- Updating the model in an effort to reduce loss.

For example:

...
# clear the gradients
optimizer.zero_grad()
# compute the model output
yhat = model(inputs)
# calculate loss
loss = criterion(yhat, targets)
# credit assignment
loss.backward()
# update model weights
optimizer.step()
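The epoch loop, the mini-batch loop, and the update pattern fit together as in the following sketch. It assumes a synthetic regression problem (y = 3x + 2 with a little noise) and a single Linear layer as the model; both are stand-ins chosen so the example runs in a second or two, and the helper mean_loss() is hypothetical.

```python
# a complete training loop on a synthetic regression problem
import torch
from torch.utils.data import TensorDataset, DataLoader
from torch.nn import Linear, MSELoss
from torch.optim import SGD

torch.manual_seed(1)
# synthetic data: y = 3x + 2 plus a small amount of noise
X = torch.rand(200, 1)
y = 3.0 * X + 2.0 + 0.01 * torch.randn(200, 1)
train_dl = DataLoader(TensorDataset(X, y), batch_size=32, shuffle=True)

# stand-in model and the optimization setup
model = Linear(1, 1)
criterion = MSELoss()
optimizer = SGD(model.parameters(), lr=0.01, momentum=0.9)

# helper (illustrative): loss over the whole dataset without tracking gradients
def mean_loss():
    with torch.no_grad():
        return criterion(model(X), y).item()

loss_before = mean_loss()
# enumerate epochs
for epoch in range(100):
    # enumerate mini batches
    for i, (inputs, targets) in enumerate(train_dl):
        # clear the gradients
        optimizer.zero_grad()
        # compute the model output
        yhat = model(inputs)
        # calculate loss
        loss = criterion(yhat, targets)
        # credit assignment
        loss.backward()
        # update model weights
        optimizer.step()
loss_after = mean_loss()
print(loss_before, loss_after)
```

Comparing the loss before and after training is a cheap way to confirm that the loop is actually updating the model.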

Step 4: Evaluate the Model

Once the model is fit, it can be evaluated on the test dataset.

This can be achieved by using the DataLoader for the test dataset and collecting the predictions for the test set, then comparing the predictions to the expected values of the test set and calculating a performance metric.

...
for i, (inputs, targets) in enumerate(test_dl):
    # evaluate the model on the test set
    yhat = model(inputs)
    ...
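A fuller version of this evaluation loop is sketched below. It adds two things the tutorial's own examples do not use, so treat them as optional: model.eval(), which switches layers such as dropout to inference behavior, and torch.no_grad(), which skips gradient tracking. The untrained Sequential model and the synthetic labels are stand-ins for illustration only.

```python
# collect predictions on a test set and compute accuracy
import torch
from numpy import vstack
from torch.utils.data import TensorDataset, DataLoader
from torch.nn import Linear, Sigmoid, Sequential

torch.manual_seed(1)
# synthetic binary problem: the label is 1 when the first feature is positive
X = torch.randn(50, 3)
y = (X[:, :1] > 0).float()
test_dl = DataLoader(TensorDataset(X, y), batch_size=16, shuffle=False)

# untrained stand-in model, used only to exercise the loop
model = Sequential(Linear(3, 1), Sigmoid())

model.eval()
predictions, actuals = list(), list()
with torch.no_grad():
    for i, (inputs, targets) in enumerate(test_dl):
        # evaluate the model on one batch
        yhat = model(inputs)
        # round probabilities to class values and store
        predictions.append(yhat.numpy().round())
        actuals.append(targets.numpy())
predictions, actuals = vstack(predictions), vstack(actuals)

# fraction of predictions that match the expected values
acc = (predictions == actuals).mean()
print('Accuracy: %.3f' % acc)
```

Because the model here is untrained, the reported accuracy is not meaningful; the point is the mechanics of collecting and stacking the per-batch results.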

Step 5: Make Predictions

A fit model can be used to make a prediction on new data.

For example, you might have a single image or a single row of data and want to make a prediction.

This requires that you wrap the data in a PyTorch Tensor data structure.

A Tensor is just the PyTorch version of a NumPy array for holding data. It also allows you to perform the automatic differentiation tasks in the model graph, like calling backward() when training the model.

The prediction too will be a Tensor, although you can retrieve the NumPy array by detaching the Tensor from the automatic differentiation graph and calling the numpy() function.

...
# convert row to data
row = Tensor([row])
# make prediction
yhat = model(row)
# retrieve numpy array
yhat = yhat.detach().numpy()
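The two roles of a Tensor described above, holding data and supporting automatic differentiation, can be seen in a few lines. In this sketch the scalar w and the single Linear layer standing in for a fitted model are assumptions for illustration.

```python
# autograd on a tensor, then a prediction converted back to NumPy
import torch
from torch import Tensor
from torch.nn import Linear

# a tensor that tracks gradients; the derivative of w^2 is 2w
w = torch.tensor(3.0, requires_grad=True)
out = w ** 2
out.backward()
print(w.grad)

# hypothetical stand-in for a fitted model with 3 inputs and 1 output
model = Linear(3, 1)

# wrap a single row of data in a Tensor with shape (1, 3)
row = [0.1, 0.2, 0.3]
row = Tensor([row])
yhat = model(row)
# the output is still attached to the autograd graph
print(yhat.requires_grad)

# detach from the graph and retrieve the NumPy array
yhat = yhat.detach().numpy()
print(yhat.shape)
value = float(yhat[0, 0])
print(value)
```

The extra pair of brackets in Tensor([row]) matters: the model expects a batch dimension, so a single row is passed as a batch of one.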

Now that we are familiar with the PyTorch API at a high-level and the model life-cycle, let’s look at how we can develop some standard deep learning models from scratch.

3. How to Develop PyTorch Deep Learning Models

In this section, you will discover how to develop, evaluate, and make predictions with standard deep learning models, including Multilayer Perceptrons (MLP) and Convolutional Neural Networks (CNN).

A Multilayer Perceptron model, or MLP for short, is a standard fully connected neural network model.

It is composed of layers of nodes where each node is connected to all outputs from the previous layer and the output of each node is connected to all inputs for nodes in the next layer.

An MLP is a model with one or more fully connected layers. This model is appropriate for tabular data, that is, data as it looks in a table or spreadsheet, with one column for each variable and one row for each sample. There are three predictive modeling problems you may want to explore with an MLP; they are binary classification, multiclass classification, and regression.

Let’s fit a model on a real dataset for each of these cases.

Note: The models in this section are effective, but not optimized. See if you can improve their performance. Post your findings in the comments below.

3.1. How to Develop an MLP for Binary Classification

We will use the Ionosphere binary (two class) classification dataset to demonstrate an MLP for binary classification.

This dataset involves predicting whether there is a structure in the atmosphere or not given radar returns.

The dataset will be downloaded automatically using Pandas, but you can learn more about it here.

We will use a LabelEncoder to encode the string labels to integer values 0 and 1. The model will be fit on 67 percent of the data, and the remaining 33 percent will be used for evaluation, split using the random_split() function.
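The split sizes follow from simple arithmetic on the number of rows. The sketch below mirrors the calculation used in the get_splits() function of the complete example; the standalone function name split_sizes is hypothetical, and 351 is the number of rows in the Ionosphere dataset.

```python
# compute train and test sizes from a test fraction
def split_sizes(n_rows, n_test=0.33):
    # number of rows held back for evaluation
    test_size = round(n_test * n_rows)
    # everything else is used for training
    train_size = n_rows - test_size
    return train_size, test_size

print(split_sizes(351))  # (235, 116)
```

Computing the training size by subtraction guarantees that the two sizes add up to the dataset length, which random_split() requires.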

It is good practice to use the ReLU activation with He uniform weight initialization. This combination goes a long way to overcome the problem of vanishing gradients when training deep neural network models. For more on ReLU, see the tutorial:
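To see what He uniform initialization does in practice, the sketch below initializes one layer and checks the range of its weights. The layer sizes are assumptions matching the first hidden layer used for this dataset; the bound formula follows from kaiming_uniform_ using a gain of sqrt(2) for ReLU with the default fan-in mode.

```python
# inspect the weight range produced by He uniform initialization
import math
import torch
from torch.nn import Linear
from torch.nn.init import kaiming_uniform_

# a layer with 34 inputs and 10 outputs
layer = Linear(34, 10)
kaiming_uniform_(layer.weight, nonlinearity='relu')

# weights are drawn uniformly from (-bound, bound), with bound = sqrt(6 / fan_in)
fan_in = layer.weight.shape[1]
bound = math.sqrt(6.0 / fan_in)
max_abs = layer.weight.abs().max().item()
print(bound, max_abs)
```

The bound shrinks as the number of inputs grows, which keeps the scale of each layer's output roughly stable as the network gets wider.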

The model predicts the probability of class 1 and uses the sigmoid activation function. The model is optimized using stochastic gradient descent and seeks to minimize the binary cross-entropy loss.

The complete example is listed below.

# pytorch mlp for binary classification
from numpy import vstack
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.metrics import accuracy_score
from torch.utils.data import Dataset
from torch.utils.data import DataLoader
from torch.utils.data import random_split
from torch import Tensor
from torch.nn import Linear
from torch.nn import ReLU
from torch.nn import Sigmoid
from torch.nn import Module
from torch.optim import SGD
from torch.nn import BCELoss
from torch.nn.init import kaiming_uniform_
from torch.nn.init import xavier_uniform_

# dataset definition
class CSVDataset(Dataset):
    # load the dataset
    def __init__(self, path):
        # load the csv file as a dataframe
        df = read_csv(path, header=None)
        # store the inputs and outputs
        self.X = df.values[:, :-1]
        self.y = df.values[:, -1]
        # ensure input data is floats
        self.X = self.X.astype('float32')
        # label encode target and ensure the values are floats
        self.y = LabelEncoder().fit_transform(self.y)
        self.y = self.y.astype('float32')
        self.y = self.y.reshape((len(self.y), 1))

    # number of rows in the dataset
    def __len__(self):
        return len(self.X)

    # get a row at an index
    def __getitem__(self, idx):
        return [self.X[idx], self.y[idx]]

    # get indexes for train and test rows
    def get_splits(self, n_test=0.33):
        # determine sizes
        test_size = round(n_test * len(self.X))
        train_size = len(self.X) - test_size
        # calculate the split
        return random_split(self, [train_size, test_size])

# model definition
class MLP(Module):
    # define model elements
    def __init__(self, n_inputs):
        super(MLP, self).__init__()
        # input to first hidden layer
        self.hidden1 = Linear(n_inputs, 10)
        kaiming_uniform_(self.hidden1.weight, nonlinearity='relu')
        self.act1 = ReLU()
        # second hidden layer
        self.hidden2 = Linear(10, 8)
        kaiming_uniform_(self.hidden2.weight, nonlinearity='relu')
        self.act2 = ReLU()
        # third hidden layer and output
        self.hidden3 = Linear(8, 1)
        xavier_uniform_(self.hidden3.weight)
        self.act3 = Sigmoid()

    # forward propagate input
    def forward(self, X):
        # input to first hidden layer
        X = self.hidden1(X)
        X = self.act1(X)
        # second hidden layer
        X = self.hidden2(X)
        X = self.act2(X)
        # third hidden layer and output
        X = self.hidden3(X)
        X = self.act3(X)
        return X

# prepare the dataset
def prepare_data(path):
    # load the dataset
    dataset = CSVDataset(path)
    # calculate split
    train, test = dataset.get_splits()
    # prepare data loaders
    train_dl = DataLoader(train, batch_size=32, shuffle=True)
    test_dl = DataLoader(test, batch_size=1024, shuffle=False)
    return train_dl, test_dl

# train the model
def train_model(train_dl, model):
    # define the optimization
    criterion = BCELoss()
    optimizer = SGD(model.parameters(), lr=0.01, momentum=0.9)
    # enumerate epochs
    for epoch in range(100):
        # enumerate mini batches
        for i, (inputs, targets) in enumerate(train_dl):
            # clear the gradients
            optimizer.zero_grad()
            # compute the model output
            yhat = model(inputs)
            # calculate loss
            loss = criterion(yhat, targets)
            # credit assignment
            loss.backward()
            # update model weights
            optimizer.step()

# evaluate the model
def evaluate_model(test_dl, model):
    predictions, actuals = list(), list()
    for i, (inputs, targets) in enumerate(test_dl):
        # evaluate the model on the test set
        yhat = model(inputs)
        # retrieve numpy array
        yhat = yhat.detach().numpy()
        actual = targets.numpy()
        actual = actual.reshape((len(actual), 1))
        # round to class values
        yhat = yhat.round()
        # store
        predictions.append(yhat)
        actuals.append(actual)
    predictions, actuals = vstack(predictions), vstack(actuals)
    # calculate accuracy
    acc = accuracy_score(actuals, predictions)
    return acc

# make a class prediction for one row of data
def predict(row, model):
    # convert row to data
    row = Tensor([row])
    # make prediction
    yhat = model(row)
    # retrieve numpy array
    yhat = yhat.detach().numpy()
    return yhat

# prepare the data
path = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/ionosphere.csv'
train_dl, test_dl = prepare_data(path)
print(len(train_dl.dataset), len(test_dl.dataset))
# define the network
model = MLP(34)
# train the model
train_model(train_dl, model)
# evaluate the model
acc = evaluate_model(test_dl, model)
print('Accuracy: %.3f' % acc)
# make a single prediction (expect class=1)
row = [1,0,0.99539,-0.05889,0.85243,0.02306,0.83398,-0.37708,1,0.03760,0.85243,-0.17755,0.59755,-0.44945,0.60536,-0.38223,0.84356,-0.38542,0.58212,-0.32192,0.56971,-0.29674,0.36946,-0.47357,0.56811,-0.51171,0.41078,-0.46168,0.21266,-0.34090,0.42267,-0.54487,0.18641,-0.45300]
yhat = predict(row, model)
print('Predicted: %.3f (class=%d)' % (yhat, yhat.round()))

Running the example first reports the shape of the train and test datasets, then fits the model and evaluates it on the test dataset. Finally, a prediction is made for a single row of data.

Your specific results will vary given the stochastic nature of the learning algorithm. Try running the example a few times.

What result did you get?

Can you change the model to do better?

Post your findings to the comments below.

In this case, we can see that the model achieved a classification accuracy of about 95 percent and then predicted a probability of 0.998 that the one row of data belongs to class 1.

235 116
Accuracy: 0.948
Predicted: 0.998 (class=1)

3.2. How to Develop an MLP for Multiclass Classification

We will use the Iris flowers multiclass classification dataset to demonstrate an MLP for multiclass classification.

This problem involves predicting the species of iris flower given measures of the flower.

The dataset will be downloaded automatically using Pandas, but you can learn more about it here.

Given that it is a multiclass classification, the model must have one node for each class in the output layer and use the softmax activation function. The loss function is the cross entropy, which is appropriate for integer encoded class labels (e.g. 0 for one class, 1 for the next class, etc.).
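The relationship between softmax and the cross-entropy loss can be checked directly. The sketch below uses made-up scores for two rows and three classes, and shows that PyTorch's CrossEntropyLoss applied to raw scores matches a log-softmax followed by the negative log-likelihood loss. This suggests that a Softmax layer in the model is mainly useful for reading the outputs as probabilities.

```python
# compare CrossEntropyLoss with an explicit log-softmax and NLL loss
import torch
from torch.nn import CrossEntropyLoss
from torch.nn.functional import log_softmax, nll_loss

# raw scores for two rows and three classes, with integer class labels
scores = torch.tensor([[2.0, 1.0, 0.1], [0.5, 2.5, 0.3]])
targets = torch.tensor([0, 1])

# the built-in loss applied to raw scores
ce = CrossEntropyLoss()(scores, targets)
# the same quantity computed in two explicit steps
manual = nll_loss(log_softmax(scores, dim=1), targets)
print(ce.item(), manual.item())

# softmax turns scores into probabilities; argmax picks the class label
probs = torch.softmax(scores, dim=1)
predicted = probs.argmax(dim=1)
print(probs)
print(predicted)
```

Since softmax preserves the ordering of the scores, taking the argmax of the probabilities gives the same class as taking the argmax of the raw scores.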

The complete example of fitting and evaluating an MLP on the iris flowers dataset is listed below.

# pytorch mlp for multiclass classification
from numpy import vstack
from numpy import argmax
from pandas import read_csv
from sklearn.preprocessing import LabelEncoder
from sklearn.metrics import accuracy_score
from torch import Tensor
from torch.utils.data import Dataset
from torch.utils.data import DataLoader
from torch.utils.data import random_split
from torch.nn import Linear
from torch.nn import ReLU
from torch.nn import Softmax
from torch.nn import Module
from torch.optim import SGD
from torch.nn import CrossEntropyLoss
from torch.nn.init import kaiming_uniform_
from torch.nn.init import xavier_uniform_

# dataset definition
class CSVDataset(Dataset):
    # load the dataset
    def __init__(self, path):
        # load the csv file as a dataframe
        df = read_csv(path, header=None)
        # store the inputs and outputs
        self.X = df.values[:, :-1]
        self.y = df.values[:, -1]
        # ensure input data is floats
        self.X = self.X.astype('float32')
        # label encode target as integer class labels
        self.y = LabelEncoder().fit_transform(self.y)

    # number of rows in the dataset
    def __len__(self):
        return len(self.X)

    # get a row at an index
    def __getitem__(self, idx):
        return [self.X[idx], self.y[idx]]

    # get indexes for train and test rows
    def get_splits(self, n_test=0.33):
        # determine sizes
        test_size = round(n_test * len(self.X))
        train_size = len(self.X) - test_size
        # calculate the split
        return random_split(self, [train_size, test_size])

# model definition
class MLP(Module):
    # define model elements
    def __init__(self, n_inputs):
        super(MLP, self).__init__()
        # input to first hidden layer
        self.hidden1 = Linear(n_inputs, 10)
        kaiming_uniform_(self.hidden1.weight, nonlinearity='relu')
        self.act1 = ReLU()
        # second hidden layer
        self.hidden2 = Linear(10, 8)
        kaiming_uniform_(self.hidden2.weight, nonlinearity='relu')
        self.act2 = ReLU()
        # third hidden layer and output
        self.hidden3 = Linear(8, 3)
        xavier_uniform_(self.hidden3.weight)
        self.act3 = Softmax(dim=1)

    # forward propagate input
    def forward(self, X):
        # input to first hidden layer
        X = self.hidden1(X)
        X = self.act1(X)
        # second hidden layer
        X = self.hidden2(X)
        X = self.act2(X)
        # output layer
        X = self.hidden3(X)
        X = self.act3(X)
        return X

# prepare the dataset
def prepare_data(path):
    # load the dataset
    dataset = CSVDataset(path)
    # calculate split
    train, test = dataset.get_splits()
    # prepare data loaders
    train_dl = DataLoader(train, batch_size=32, shuffle=True)
    test_dl = DataLoader(test, batch_size=1024, shuffle=False)
    return train_dl, test_dl

# train the model
def train_model(train_dl, model):
    # define the optimization
    criterion = CrossEntropyLoss()
    optimizer = SGD(model.parameters(), lr=0.01, momentum=0.9)
    # enumerate epochs
    for epoch in range(500):
        # enumerate mini batches
        for i, (inputs, targets) in enumerate(train_dl):
            # clear the gradients
            optimizer.zero_grad()
            # compute the model output
            yhat = model(inputs)
            # calculate loss
            loss = criterion(yhat, targets)
            # credit assignment
            loss.backward()
            # update model weights
            optimizer.step()

# evaluate the model
def evaluate_model(test_dl, model):
    predictions, actuals = list(), list()
    for i, (inputs, targets) in enumerate(test_dl):
        # evaluate the model on the test set
        yhat = model(inputs)
        # retrieve numpy array
        yhat = yhat.detach().numpy()
        actual = targets.numpy()
        # convert to class labels
        yhat = argmax(yhat, axis=1)
        # reshape for stacking
        actual = actual.reshape((len(actual), 1))
        yhat = yhat.reshape((len(yhat), 1))
        # store
        predictions.append(yhat)
        actuals.append(actual)
    predictions, actuals = vstack(predictions), vstack(actuals)
    # calculate accuracy
    acc = accuracy_score(actuals, predictions)
    return acc

# make a class prediction for one row of data
def predict(row, model):
    # convert row to data
    row = Tensor([row])
    # make prediction
    yhat = model(row)
    # retrieve numpy array
    yhat = yhat.detach().numpy()
    return yhat

# prepare the data
path = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/iris.csv'
train_dl, test_dl = prepare_data(path)
print(len(train_dl.dataset), len(test_dl.dataset))
# define the network
model = MLP(4)
# train the model
train_model(train_dl, model)
# evaluate the model
acc = evaluate_model(test_dl, model)
print('Accuracy: %.3f' % acc)
# make a single prediction
row = [5.1,3.5,1.4,0.2]
yhat = predict(row, model)
print('Predicted: %s (class=%d)' % (yhat, argmax(yhat)))

Running the example first reports the shape of the train and test datasets, then fits the model and evaluates it on the test dataset. Finally, a prediction is made for a single row of data.

Your specific results will vary given the stochastic nature of the learning algorithm. Try running the example a few times.

What result did you get?

Can you change the model to do better?

Post your findings to the comments below.

In this case, we can see that the model achieved a classification accuracy of about 98 percent and then predicted a probability of a row of data belonging to each class, although class 0 has the highest probability.

100 50
Accuracy: 0.980
Predicted: [[9.5524162e-01 4.4516966e-02 2.4138369e-04]] (class=0)

3.3. How to Develop an MLP for Regression

We will use the Boston housing regression dataset to demonstrate an MLP for regression predictive modeling.

This problem involves predicting house value based on properties of the house and neighborhood.

The dataset will be downloaded automatically using Pandas, but you can learn more about it here.

This is a regression problem that involves predicting a single numeric value. As such, the output layer has a single node and uses the default or linear activation function (no activation function). The mean squared error (mse) loss is minimized when fitting the model.

Recall that this is regression, not classification; therefore, we cannot calculate classification accuracy. For more on this, see the tutorial:

The complete example of fitting and evaluating an MLP on the Boston housing dataset is listed below.

# pytorch mlp for regression

from numpy import vstack

from numpy import sqrt

from pandas import read_csv

from sklearn.metrics import mean_squared_error

from torch.utils.data import Dataset

from torch.utils.data import DataLoader

from torch.utils.data import random_split

from torch import Tensor

from torch.nn import Linear

from torch.nn import Sigmoid

from torch.nn import Module

from torch.optim import SGD

from torch.nn import MSELoss

from torch.nn.init import xavier_uniform_

# dataset definition

class CSVDataset(Dataset):

# load the dataset

def __init__(self, path):

# load the csv file as a dataframe

df = read_csv(path, header=None)

# store the inputs and outputs

self.X = df.values[:, :-1].astype('float32')

self.y = df.values[:, -1].astype('float32')

# ensure target has the right shape

self.y = self.y.reshape((len(self.y), 1))

# number of rows in the dataset

def __len__(self):

return len(self.X)

# get a row at an index

def __getitem__(self, idx):

return [self.X[idx], self.y[idx]]

# get indexes for train and test rows

def get_splits(self, n_test=0.33):

# determine sizes

test_size = round(n_test * len(self.X))

train_size = len(self.X) - test_size

# calculate the split

return random_split(self, [train_size, test_size])

# model definition

class MLP(Module):

# define model elements

def __init__(self, n_inputs):

super(MLP, self).__init__()

# input to first hidden layer

self.hidden1 = Linear(n_inputs, 10)

xavier_uniform_(self.hidden1.weight)

self.act1 = Sigmoid()

# second hidden layer

self.hidden2 = Linear(10, 8)

xavier_uniform_(self.hidden2.weight)

self.act2 = Sigmoid()

# third hidden layer and output

self.hidden3 = Linear(8, 1)

xavier_uniform_(self.hidden3.weight)

# forward propagate input

def forward(self, X):

# input to first hidden layer

X = self.hidden1(X)

X = self.act1(X)

# second hidden layer

X = self.hidden2(X)

X = self.act2(X)

# third hidden layer and output

X = self.hidden3(X)

return X

# prepare the dataset

def prepare_data(path):

# load the dataset

dataset = CSVDataset(path)

# calculate split

train, test = dataset.get_splits()

# prepare data loaders

train_dl = DataLoader(train, batch_size=32, shuffle=True)

test_dl = DataLoader(test, batch_size=1024, shuffle=False)

return train_dl, test_dl

# train the model
def train_model(train_dl, model):
    # define the optimization
    criterion = MSELoss()
    optimizer = SGD(model.parameters(), lr=0.01, momentum=0.9)
    # enumerate epochs
    for epoch in range(100):
        # enumerate mini batches
        for i, (inputs, targets) in enumerate(train_dl):
            # clear the gradients
            optimizer.zero_grad()
            # compute the model output
            yhat = model(inputs)
            # calculate loss
            loss = criterion(yhat, targets)
            # credit assignment
            loss.backward()
            # update model weights
            optimizer.step()

# evaluate the model
def evaluate_model(test_dl, model):
    predictions, actuals = list(), list()
    for i, (inputs, targets) in enumerate(test_dl):
        # evaluate the model on the test set
        yhat = model(inputs)
        # retrieve numpy array
        yhat = yhat.detach().numpy()
        actual = targets.numpy()
        actual = actual.reshape((len(actual), 1))
        # store
        predictions.append(yhat)
        actuals.append(actual)
    predictions, actuals = vstack(predictions), vstack(actuals)
    # calculate mse
    mse = mean_squared_error(actuals, predictions)
    return mse

# make a prediction for one row of data
def predict(row, model):
    # convert row to a tensor
    row = Tensor([row])
    # make prediction
    yhat = model(row)
    # retrieve numpy array
    yhat = yhat.detach().numpy()
    return yhat

# prepare the data
path = 'https://raw.githubusercontent.com/jbrownlee/Datasets/master/housing.csv'
train_dl, test_dl = prepare_data(path)
print(len(train_dl.dataset), len(test_dl.dataset))
# define the network
model = MLP(13)
# train the model
train_model(train_dl, model)
# evaluate the model
mse = evaluate_model(test_dl, model)
print('MSE: %.3f, RMSE: %.3f' % (mse, sqrt(mse)))
# make a single prediction
row = [0.00632,18.00,2.310,0,0.5380,6.5750,65.20,4.0900,1,296.0,15.30,396.90,4.98]
yhat = predict(row, model)
print('Predicted: %.3f' % yhat)

Running the example first reports the number of rows in the train and test datasets, then fits the model and evaluates it on the test dataset. Finally, a prediction is made for a single row of data.
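The two sizes reported first come from the arithmetic in get_splits(), which rounds the test fraction of the rows and gives the remainder to training. Below is a minimal sketch of that arithmetic. It assumes the dataset has 506 rows, a figure inferred from the sizes in the sample output (339 + 167), and split_sizes is a hypothetical helper name used only for illustration.

```python
# sketch of the split-size arithmetic used by get_splits()
# split_sizes is a hypothetical helper; 506 rows is an assumption inferred from the output

def split_sizes(n_rows, n_test=0.33):
    # number of rows held back for testing
    test_size = round(n_test * n_rows)
    # the remaining rows are used for training
    train_size = n_rows - test_size
    return train_size, test_size

train_size, test_size = split_sizes(506)
print(train_size, test_size)
```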

Your specific results will vary given the stochastic nature of the learning algorithm. Try running the example a few times.

What result did you get?

Can you change the model to do better?

Post your findings to the comments below.

In this case, we can see that the model achieved an MSE of about 82, which is an RMSE of about nine (units are thousands of dollars). A value of about 22 is then predicted for the single example.

339 167
MSE: 82.576, RMSE: 9.087
Predicted: 21.909
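The RMSE is the square root of the MSE, which returns the error to the units of the target variable. As a quick check of the figures above, the sketch below repeats the conversion using the MSE from this particular run; your own value will differ.

```python
# convert the reported MSE into an RMSE
from math import sqrt

# MSE taken from the sample output above; your value will differ
mse = 82.576
# the square root returns the error to the units of the target (thousands of dollars)
rmse = sqrt(mse)
print('MSE: %.3f, RMSE: %.3f' % (mse, rmse))
```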

3.4. How to Develop a CNN for Image Classification

Convolutional Neural Networks, or CNNs for short, are a type of network designed for image input.

They are composed of convolutional layers that extract features (called feature maps) and pooling layers that distill those features down to the most salient elements.

CNNs are best suited to image classification tasks, although they can be used on a wide array of tasks that take images as input.
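To see how these layers shrink an image as it passes through a network, the sketch below traces the width (or height) of a feature map through two rounds of convolution and pooling. The sizes are assumptions chosen for illustration: a 28×28 input, 3×3 convolutions with no padding and a stride of 1, 2×2 max pooling with a stride of 2, and 32 feature maps. The helper names conv_out and pool_out are hypothetical.

```python
# trace the spatial size of a feature map through conv and pooling layers

def conv_out(size, kernel, stride=1, padding=0):
    # output width (or height) of a convolution
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel, stride):
    # output width (or height) of a pooling layer without padding
    return (size - kernel) // stride + 1

size = 28                        # assumed input width and height
size = conv_out(size, 3)         # first 3x3 convolution
size = pool_out(size, 2, 2)      # first 2x2 max pooling
size = conv_out(size, 3)         # second 3x3 convolution
size = pool_out(size, 2, 2)      # second 2x2 max pooling
n_filters = 32                   # assumed number of feature maps
flat = size * size * n_filters   # values per image after flattening
print(size, flat)
```

Under these assumptions, each feature map is reduced to a 5×5 grid, so 32 feature maps flatten to 800 values per image.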

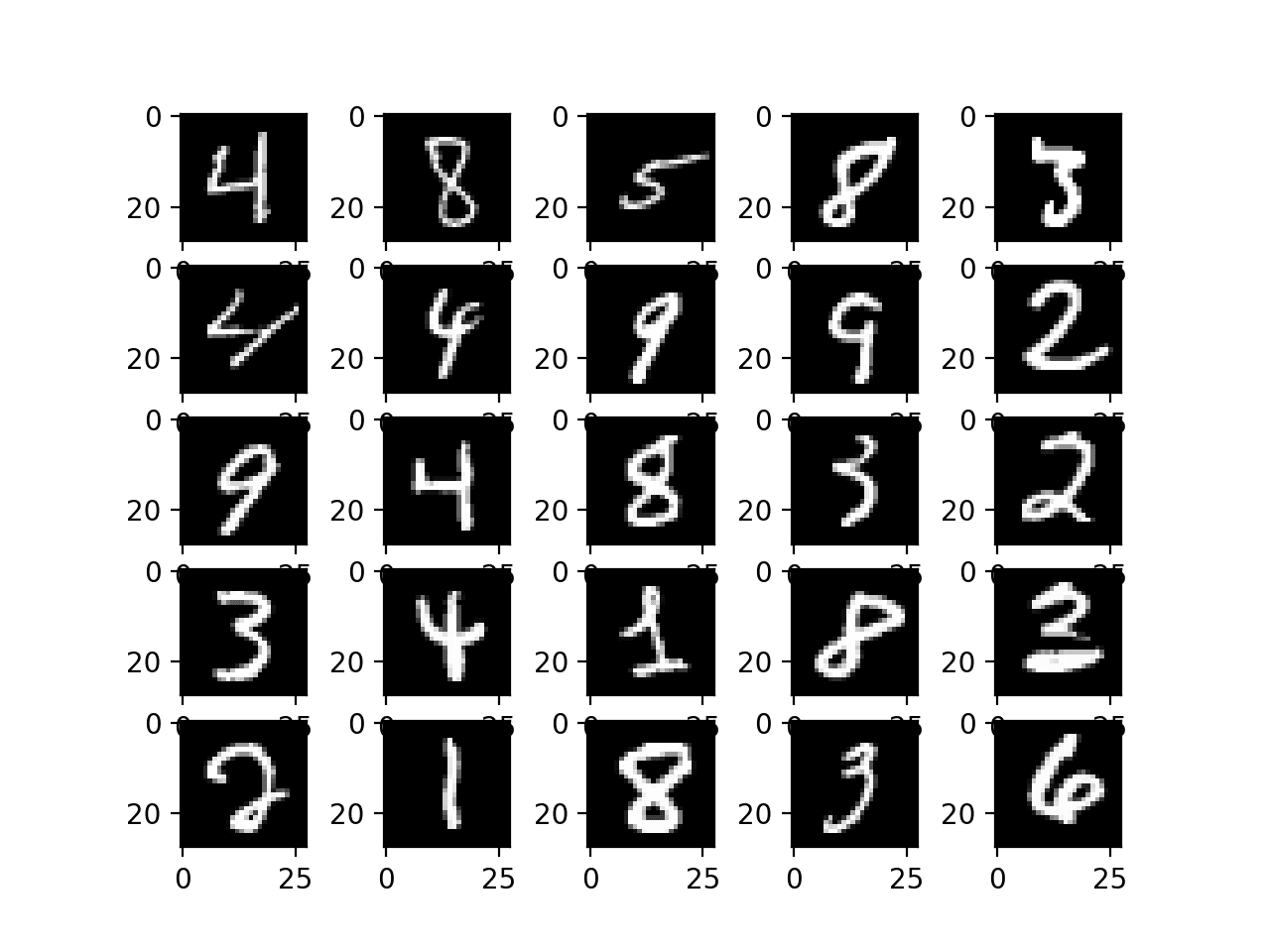

A popular image classification task is the MNIST handwritten digit classification. It involves tens of thousands of handwritten digits that must be classified as a number between 0 and 9.

The torchvision API provides a convenience function to download and load this dataset directly.

The example below loads the dataset and plots the first few images.

# load mnist dataset in pytorch
from torch.utils.data import DataLoader
from torchvision.datasets import MNIST
from torchvision.transforms import Compose
from torchvision.transforms import ToTensor
from matplotlib import pyplot
# define location to save or load the dataset
path = '~/.torch/datasets/mnist'
# define the transforms to apply to the data
trans = Compose([ToTensor()])
# download and define the datasets
train = MNIST(path, train=True, download=True, transform=trans)
test = MNIST(path, train=False, download=True, transform=trans)
# define how to enumerate the datasets
train_dl = DataLoader(train, batch_size=32, shuffle=True)
test_dl = DataLoader(test, batch_size=32, shuffle=True)
# get one batch of images
i, (inputs, targets) = next(enumerate(train_dl))
# plot some images
for i in range(25):
    # define subplot
    pyplot.subplot(5, 5, i+1)
    # plot raw pixel data
    pyplot.imshow(inputs[i][0], cmap='gray')
# show the figure
pyplot.show()

Running the example downloads and loads the MNIST dataset. The default train and test datasets have the following shapes:

Train: X=(60000, 28, 28), y=(60000,)
Test: X=(10000, 28, 28), y=(10000,)

A plot is then created showing a grid of examples of handwritten images in the training dataset.

Plot of Handwritten Digits From the MNIST dataset

We can train a CNN model to classify the images in the MNIST dataset.

Note that the images are arrays of grayscale pixel data, so a channel dimension must be added before they can be used as input to the model. The ToTensor transform does this for us, converting each 28×28 image into a tensor with the shape 1×28×28.

It is a good idea to scale the pixel values from the default range of 0-255 to have a zero mean and a standard deviation of 1.
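As a rough illustration of this scaling, the sketch below applies it to individual pixel values in two steps: first rescaling from 0-255 to 0-1, then standardizing with a mean and standard deviation. The values 0.1307 and 0.3081 are the ones passed to Normalize in the complete example; the helper name standardize is hypothetical.

```python
# sketch of pixel standardization for a single value

def standardize(pixel, mean=0.1307, std=0.3081):
    # rescale from the range 0-255 to the range 0-1
    scaled = pixel / 255.0
    # subtract the mean and divide by the standard deviation
    return (scaled - mean) / std

# a black pixel, a mid-gray pixel and a white pixel
for pixel in [0, 128, 255]:
    print(pixel, round(standardize(pixel), 3))
```

Dark background pixels end up slightly below zero and bright pixels well above it, which is the range the model sees during training.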

The complete example of fitting and evaluating a CNN model on the MNIST dataset is listed below.

# pytorch cnn for multiclass classification
from numpy import vstack
from numpy import argmax
from pandas import read_csv
from sklearn.metrics import accuracy_score
from torchvision.datasets import MNIST
from torchvision.transforms import Compose
from torchvision.transforms import ToTensor
from torchvision.transforms import Normalize
from torch.utils.data import DataLoader
from torch.nn import Conv2d
from torch.nn import MaxPool2d
from torch.nn import Linear
from torch.nn import ReLU
from torch.nn import Softmax
from torch.nn import Module
from torch.optim import SGD
from torch.nn import CrossEntropyLoss
from torch.nn.init import kaiming_uniform_
from torch.nn.init import xavier_uniform_

# model definition
class CNN(Module):
    # define model elements
    def __init__(self, n_channels):
        super(CNN, self).__init__()
        # input to first hidden layer
        self.hidden1 = Conv2d(n_channels, 32, (3,3))
        kaiming_uniform_(self.hidden1.weight, nonlinearity='relu')
        self.act1 = ReLU()
        # first pooling layer
        self.pool1 = MaxPool2d((2,2), stride=(2,2))
        # second hidden layer
        self.hidden2 = Conv2d(32, 32, (3,3))
        kaiming_uniform_(self.hidden2.weight, nonlinearity='relu')
        self.act2 = ReLU()
        # second pooling layer
        self.pool2 = MaxPool2d((2,2), stride=(2,2))
        # fully connected layer
        self.hidden3 = Linear(5*5*32, 100)
        kaiming_uniform_(self.hidden3.weight, nonlinearity='relu')
        self.act3 = ReLU()
        # output layer
        self.hidden4 = Linear(100, 10)
        xavier_uniform_(self.hidden4.weight)
        self.act4 = Softmax(dim=1)

    # forward propagate input
    def forward(self, X):
        # input to first hidden layer
        X = self.hidden1(X)
        X = self.act1(X)
        X = self.pool1(X)
        # second hidden layer
        X = self.hidden2(X)
        X = self.act2(X)
        X = self.pool2(X)
        # flatten to match the input size of the fully connected layer
        X = X.view(-1, 5*5*32)
        # third hidden layer
        X = self.hidden3(X)
        X = self.act3(X)
        # output layer
        X = self.hidden4(X)
        X = self.act4(X)
        return X

# prepare the dataset
def prepare_data(path):
    # define standardization
    trans = Compose([ToTensor(), Normalize((0.1307,), (0.3081,))])
    # load dataset
    train = MNIST(path, train=True, download=True, transform=trans)
    test = MNIST(path, train=False, download=True, transform=trans)
    # prepare data loaders
    train_dl = DataLoader(train, batch_size=64, shuffle=True)
    test_dl = DataLoader(test, batch_size=1024, shuffle=False)
    return train_dl, test_dl

# train the model
def train_model(train_dl, model):
    # define the optimization
    criterion = CrossEntropyLoss()
    optimizer = SGD(model.parameters(), lr=0.01, momentum=0.9)
    # enumerate epochs
    for epoch in range(10):
        # enumerate mini batches
        for i, (inputs, targets) in enumerate(train_dl):
            # clear the gradients
            optimizer.zero_grad()
            # compute the model output
            yhat = model(inputs)
            # calculate loss
            loss = criterion(yhat, targets)
            # credit assignment
            loss.backward()
            # update model weights
            optimizer.step()

# evaluate the model
def evaluate_model(test_dl, model):
    predictions, actuals = list(), list()
    for i, (inputs, targets) in enumerate(test_dl):
        # evaluate the model on the test set
        yhat = model(inputs)
        # retrieve numpy array
        yhat = yhat.detach().numpy()
        actual = targets.numpy()
        # convert to class labels
        yhat = argmax(yhat, axis=1)
        # reshape for stacking
        actual = actual.reshape((len(actual), 1))
        yhat = yhat.reshape((len(yhat), 1))
        # store
        predictions.append(yhat)
        actuals.append(actual)
    predictions, actuals = vstack(predictions), vstack(actuals)
    # calculate accuracy
    acc = accuracy_score(actuals, predictions)
    return acc

# prepare the data
path = '~/.torch/datasets/mnist'
train_dl, test_dl = prepare_data(path)
print(len(train_dl.dataset), len(test_dl.dataset))
# define the network
model = CNN(1)
# train the model
train_model(train_dl, model)
# evaluate the model
acc = evaluate_model(test_dl, model)
print('Accuracy: %.3f' % acc)

Running the example first reports the number of images in the train and test datasets, then fits the model and evaluates it on the test dataset.

Your specific results will vary given the stochastic nature of the learning algorithm. Try running the example a few times.

What result did you get?

Can you change the model to do better?

Post your findings to the comments below.

In this case, we can see that the model achieved a classification accuracy of about 98 percent on the test dataset.

60000 10000
Accuracy: 0.985
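The accuracy reported above is the fraction of test images whose predicted class matches the true class, where the predicted class is the index of the largest of the ten model outputs. The pure-Python sketch below walks through that calculation using made-up scores for three classes rather than real model output. The helper names argmax_row and accuracy are hypothetical stand-ins for the NumPy and scikit-learn functions used in the complete example.

```python
# sketch of turning model outputs into class labels and an accuracy score

def argmax_row(scores):
    # index of the largest score in one row of model outputs
    return max(range(len(scores)), key=lambda i: scores[i])

def accuracy(actuals, predictions):
    # fraction of predictions that match the true labels
    correct = sum(1 for a, p in zip(actuals, predictions) if a == p)
    return correct / len(actuals)

# made-up outputs for four examples and three classes (illustrative only)
outputs = [
    [0.1, 0.7, 0.2],
    [0.8, 0.1, 0.1],
    [0.2, 0.3, 0.5],
    [0.6, 0.3, 0.1],
]
actuals = [1, 0, 2, 1]
predictions = [argmax_row(row) for row in outputs]
print(predictions)
print('Accuracy: %.3f' % accuracy(actuals, predictions))
```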

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Deep Learning, 2016.

- Programming PyTorch for Deep Learning: Creating and Deploying Deep Learning Applications, 2018.

- Deep Learning with PyTorch, 2020.

- Deep Learning for Coders with fastai and PyTorch: AI Applications Without a PhD, 2020.

PyTorch Project

- PyTorch Homepage.

- PyTorch Documentation.

- PyTorch Installation Guide.

- PyTorch, Wikipedia.

- PyTorch on GitHub.

Summary

In this tutorial, you discovered a step-by-step guide to developing deep learning models in PyTorch.

Specifically, you learned:

- The difference between Torch and PyTorch and how to install and confirm PyTorch is working.

- The five-step life-cycle of PyTorch models and how to define, fit, and evaluate models.

- How to develop PyTorch deep learning models for regression, classification, and predictive modeling tasks.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

The post PyTorch Tutorial: How to Develop Deep Learning Models with Python appeared first on Machine Learning Mastery.