Author: Andrea Manero-Bastin

This article was written by Hunter Heidenreich.

A look at what a generative adversarial network is and how it works.

What’s in a Generative Model?

Before we start talking about Generative Adversarial Networks (GANs), it is worth asking: what’s in a generative model? Why do we even want such a thing? What is the goal? These questions help seed our thought process so that we can better engage with GANs.

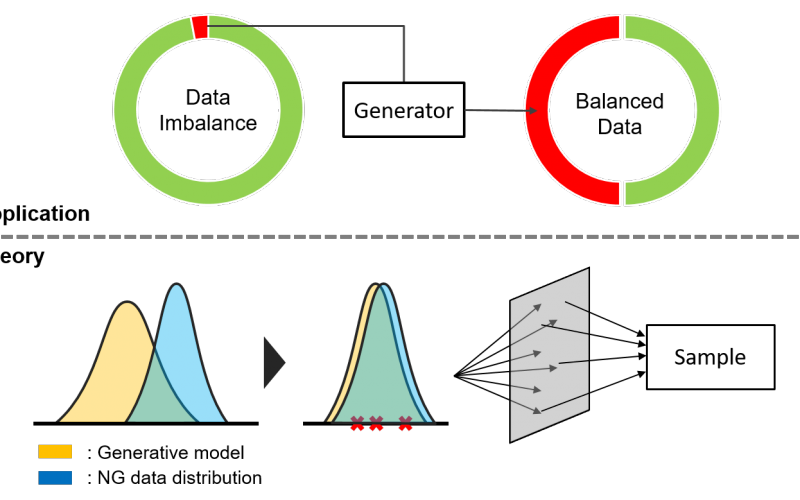

So why do we want a generative model? Well, it’s in the name! We wish to generate something. But what do we wish to generate? Typically, we wish to generate data (I know, not very specific). More than that, though, we likely wish to generate data that has never been seen before, yet still fits some data distribution (i.e. the distribution of some pre-defined data set that we have already set aside).

And the goal of such a generative model? To get so good at coming up with new generated content that we (or any system that is observing the samples) can no longer tell the difference between what is original and what is generated. Once we have a system that can do that much, we are free to begin generating new samples that we haven’t seen before, yet are still believably real data.

To step into things a little deeper, we want our generative model to accurately estimate the probability distribution of our real data. If our model has a parameter W, we wish to find the value of W that maximizes the likelihood of the real samples. When we train our generative model, we search for this ideal W, which minimizes the distance between our estimate of the data distribution and the actual data distribution.

A common measure of how far apart two distributions are is the Kullback-Leibler divergence (strictly speaking not a true distance, since it is not symmetric), and it can be shown that maximizing the log likelihood is equivalent to minimizing this divergence. Taking our parameterized generative model and minimizing the distance between it and the actual data distribution is how we create a good generative model. It also brings us to a split between two types of generative models.
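The equivalence between maximizing the log likelihood and minimizing the Kullback-Leibler divergence can be sketched in two lines. The notation is an assumption made for illustration and does not come from the article: p_data is the true data distribution, p_W is the model with parameter W, and x_1, ..., x_N are the training samples.

```latex
\begin{aligned}
D_{\mathrm{KL}}\left(p_{\text{data}} \,\|\, p_W\right)
  &= \mathbb{E}_{x \sim p_{\text{data}}}\left[\log p_{\text{data}}(x)\right]
   - \mathbb{E}_{x \sim p_{\text{data}}}\left[\log p_W(x)\right] \\
\arg\min_W D_{\mathrm{KL}}\left(p_{\text{data}} \,\|\, p_W\right)
  &= \arg\max_W \mathbb{E}_{x \sim p_{\text{data}}}\left[\log p_W(x)\right]
  \approx \arg\max_W \frac{1}{N}\sum_{i=1}^{N} \log p_W(x_i)
\end{aligned}
```

The first term on the right of the first line does not depend on W, so only the second term matters when we optimize. The final approximation replaces the expectation with an average over the samples we actually have, which is exactly the average log likelihood.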

Explicit Distribution Generative Models

An explicit distribution generative model comes up with an explicitly defined generative model distribution. It then refines this explicitly defined, parameterized estimate through training on data samples. An example of an explicit distribution generative model is a Variational Auto-Encoder (VAE). A VAE requires an explicitly assumed prior distribution and likelihood distribution. It uses these two components to come up with a “variational approximation” with which to evaluate how it is performing. Because of these requirements, VAEs have to be explicitly distributed.

Implicit Distribution Generative Models

As you may have already put together, implicit distribution generative models do not require an explicit definition of their model distribution. Instead, these models train themselves by indirectly sampling data from their parameterized distribution. And as you may have also guessed, this is what a GAN does.
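To make the explicit versus implicit distinction concrete, here is a minimal sketch using only the Python standard library. It is a toy illustration, not a VAE or a GAN: the explicit model is a plain Gaussian whose density we can write down and evaluate, while the implicit model is a hypothetical function (`implicit_sample`) that only knows how to turn noise into samples. All names and constants are assumptions made for the example.

```python
import math
import random


def fit_gaussian(data):
    """Maximum-likelihood estimate of a Gaussian's mean and standard deviation."""
    n = len(data)
    mean = sum(data) / n
    variance = sum((x - mean) ** 2 for x in data) / n
    return mean, math.sqrt(variance)


def gaussian_log_density(x, mean, std):
    """Explicit model: log p(x) has a formula we can evaluate directly."""
    return -0.5 * math.log(2 * math.pi * std ** 2) - (x - mean) ** 2 / (2 * std ** 2)


def implicit_sample(rng, scale=2.0, shift=1.0):
    """Implicit model: we never write down p(x); we only transform noise into a sample."""
    z = rng.gauss(0.0, 1.0)
    return shift + scale * math.tanh(z)


rng = random.Random(0)

# Explicit route: fit the parameters, then score data under the model.
data = [rng.gauss(3.0, 0.5) for _ in range(1000)]
mean, std = fit_gaussian(data)
avg_log_lik = sum(gaussian_log_density(x, mean, std) for x in data) / len(data)

# Implicit route: all we can do is draw samples.
samples = [implicit_sample(rng) for _ in range(5)]

print(f"fitted mean={mean:.2f}, std={std:.2f}, avg log-likelihood={avg_log_lik:.2f}")
print("implicit samples:", [round(s, 2) for s in samples])
```

Notice that nothing in the implicit route ever asks how likely a sample is. That is the gap a GAN has to work around, since it cannot score its own output with a likelihood.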

Well, how exactly does it do that? Let’s dive a little bit into GANs and then we’ll start to paint that picture.

High-Level GAN Understanding

Generative Adversarial Networks have three components to their name. We’ve touched on the generative aspect and the network aspect is pretty self-explanatory. But what about the adversarial portion?

Well, GANs have two components to their network, a generator (G) and a discriminator (D). These two components come together in the network and work as adversaries, pushing the performance of one another.

The Generator

The generator is responsible for producing fake examples of data. It takes as input some latent variable (which we will refer to as z) and outputs data that is of the same form as data in the original data set.

Latent variables are hidden variables. When talking about GANs we have this notion of a “latent space” that we can sample from. We can continuously slide through this latent space which, when you have a well-trained GAN, will have substantial (and oftentimes somewhat understandable) effects on the output.

If our latent variable is z and our target variable is x, we can think of the generator network as learning a function that maps from z (the latent space) to x (hopefully, the real data distribution).
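The idea of a generator as a function from z to x, and of sliding through the latent space, can be sketched with a tiny untrained network in plain Python. Everything here is an assumption for illustration: the layer sizes, the random weights, and the helper names (`init_layer`, `dense`, `generator`, `interpolate`) are hypothetical, and a real GAN generator would be trained and far larger.

```python
import math
import random

LATENT_DIM = 2  # size of z (hypothetical choice)
HIDDEN_DIM = 4
DATA_DIM = 3    # size of x (hypothetical choice)


def init_layer(rng, n_in, n_out):
    """Random weights and zero biases for one fully connected layer."""
    weights = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def identity(a):
    return a


def dense(layer, inputs, activation):
    """Apply one fully connected layer followed by an activation."""
    weights, biases = layer
    return [
        activation(sum(w * v for w, v in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def generator(params, z):
    """Map a latent vector z to a vector shaped like a data sample x."""
    hidden = dense(params[0], z, math.tanh)
    return dense(params[1], hidden, identity)


def interpolate(z_a, z_b, t):
    """Linear interpolation between two latent vectors, with t in [0, 1]."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]


rng = random.Random(0)
params = [
    init_layer(rng, LATENT_DIM, HIDDEN_DIM),
    init_layer(rng, HIDDEN_DIM, DATA_DIM),
]

# Sample two points in latent space and walk between them in five steps.
z_start = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
z_end = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
path = [generator(params, interpolate(z_start, z_end, i / 4)) for i in range(5)]

for x in path:
    print([round(v, 3) for v in x])
```

Because the network is a continuous function, small steps in z produce small changes in x. With an untrained network the outputs mean nothing, but the mechanics of sampling z and mapping it to x are the same.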

The Discriminator

The discriminator’s role is to discriminate. It is responsible for taking in a list of samples and predicting whether each sample is real or fake. The discriminator outputs a higher probability if it believes a sample is real.
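As a rough sketch of what "outputs a higher probability if it believes a sample is real" means, the discriminator can be pictured as a binary classifier that squashes a score into the range 0 to 1. The example below assumes a single linear layer followed by a sigmoid, with hand-picked, untrained weights. These are hypothetical choices for illustration, and a real discriminator is usually a deep network.

```python
import math


def sigmoid(a):
    """Squash any real-valued score into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))


def discriminator(weights, bias, sample):
    """Return the estimated probability that `sample` is real."""
    score = sum(w * v for w, v in zip(weights, sample)) + bias
    return sigmoid(score)


# Hypothetical, hand-picked parameters (not trained).
weights = [1.5, -0.5]
bias = 0.1

# A small batch of two-dimensional samples.
batch = [[2.0, 0.0], [-2.0, 1.0]]
probs = [discriminator(weights, bias, x) for x in batch]
labels = ["real" if p > 0.5 else "fake" for p in probs]

for x, p, label in zip(batch, probs, labels):
    print(x, round(p, 3), label)
```

The 0.5 threshold is only used here to turn the probability into a label for printing. During training it is the probability itself that matters.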

We can think of our discriminator as a “bullshit detector” of sorts.

Adversarial Competition

These two components come together and battle it out. The generator and discriminator oppose one another, pursuing opposite goals: the generator pushes to create samples that look more and more real, and the discriminator wishes to always correctly classify where a sample comes from.

The fact that these goals are directly opposite one another is where GANs get the adversarial portion of their name.
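The opposing goals can be made concrete with a small numeric sketch. This excerpt does not spell out a loss function, so the code below assumes the standard minimax formulation from the original GAN paper, in which the discriminator maximizes log D(x) + log(1 - D(G(z))) and the generator minimizes log(1 - D(G(z))). The discriminator outputs are made-up numbers, not results from a trained model.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy with real samples labeled 1 and generated samples labeled 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Minimax form: the generator tries to make log(1 - D(G(z))) as small as possible."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)


# Made-up discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8]   # on real samples
fooled = [0.7, 0.6]   # on fakes that the discriminator finds convincing
caught = [0.1, 0.2]   # on fakes that the discriminator sees through

print("D loss when fakes are caught:", round(discriminator_loss(d_real, caught), 3))
print("D loss when D is fooled:     ", round(discriminator_loss(d_real, fooled), 3))
print("G loss when fakes are caught:", round(generator_loss(caught), 3))
print("G loss when D is fooled:     ", round(generator_loss(fooled), 3))
```

The same change in the discriminator's outputs on fake samples moves the two losses in opposite directions: whatever helps the generator hurts the discriminator, and vice versa.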

